Master AI text to speech for video games with our step-by-step guide. Improve player experience with dynamic and realistic in-game dialogue!

Introduction to AI Text-to-Speech Technology in Gaming

AI text to speech for video games is changing game development in some pretty cool ways these days. What used to be just a dream (computer voices that sound natural, express emotions, and bring game characters to life) is now something developers use all the time.

Back in the early days, game audio was just simple beeps and basic sound effects. Then we moved to text boxes for dialogue. Now look where we are! Voice technology has become just another tool in many game developers’ toolkits. Whether you’re making games in your bedroom or working at a big studio, these technologies are changing how we create immersive experiences from start to finish.

The best part? You don’t need a technical background to use this stuff anymore. Lots of powerful AI voice generation tools come as affordable software or even built into game engines that anyone can try out.

This whole shift brings up some interesting possibilities for game creation. When an AI helps voice hundreds of NPCs or creates dynamic dialogue on the fly, games can become more alive and responsive than ever before. Most developers find that AI works best as a helper rather than doing everything itself – it adds to creativity instead of taking over.

In this guide, we’ll walk through how these text to speech gaming tools fit into the whole game development process, from writing your first lines of dialogue to getting that final polished voice ready for players to hear. Whether you make games yourself, enjoy tech, or just wonder how computers can help create more immersive worlds, this look at AI-powered voice for video games shows where things are headed in this fast-changing field.

Benefits of Implementing AI Text-to-Speech in Games

Enhanced Player Immersion and Engagement

When characters in your game can actually talk instead of just showing text boxes, the whole experience feels more real. AI voiceover for video games helps players connect with your game world in a way that’s hard to achieve with just text.

Think about it – a shopkeeper who greets you by name and remembers what you bought last time feels like an actual person rather than just a menu with some text. When that NPC has a voice that sounds natural and fits their character, players get pulled into your world even more.

Even better, these voices can change based on what’s happening in the game. A friendly villager might sound scared during a monster attack, or a mentor character might sound proud when you accomplish something difficult. This kind of dynamic voice work was super expensive with human actors, but AI makes it possible for games of all sizes.

Accessibility Improvements for Diverse Audiences

Gaming accessibility gets a big boost with AI voices. Some players have trouble reading small text or reading quickly enough during gameplay. Others have visual impairments that make text-based games hard to enjoy. With voice technology, these players can finally experience your game the way you intended.

This technology also helps players who might not be comfortable with the language your game is written in. Someone learning English might struggle with reading quest text but could understand the same information when it’s spoken aloud. By adding AI voices, you’re opening your game to a much wider audience.

Cost and Resource Efficiency vs. Traditional Voice Acting

Let’s talk about the elephant in the room – professional voice acting is expensive! For indie developers or even mid-sized studios, voice generation for NPCs using AI can save tons of money while still giving players a voiced experience.

Here’s a simple comparison:

- Professional voice actor: Around $200-500 for an hour session (which might cover 100-200 lines)

- AI voice generation: Often less than $0.05 per line with unlimited revisions

This means you could voice an entire RPG with thousands of lines for the cost of hiring just one or two human actors for a few hours. Plus, when you need to change dialogue or add content later, you don’t need to book expensive re-recording sessions.
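
To make the comparison concrete, here is a rough back-of-the-envelope sketch in Python. The rates are the ballpark figures quoted above; the 5,000-line script size and the function names are assumptions made up for illustration.

```python
import math

def ai_cost(lines: int, price_per_line: float = 0.05) -> float:
    """Upper-bound AI cost, using the roughly $0.05 per line figure above."""
    return lines * price_per_line

def studio_cost(lines: int, hourly_rate: float, lines_per_hour: int) -> float:
    """Studio cost, assuming whole one-hour sessions are billed."""
    hours = math.ceil(lines / lines_per_hour)
    return hours * hourly_rate

LINES = 5_000  # assumed script size, purely illustrative

print(f"AI voices:             ${ai_cost(LINES):,.2f}")
print(f"Studio (cheapest case): ${studio_cost(LINES, 200, 200):,.2f}")
print(f"Studio (priciest case): ${studio_cost(LINES, 500, 100):,.2f}")
```

With these assumed numbers, the AI route comes to about $250, while studio sessions land somewhere between $5,000 and $25,000. Real quotes will vary, so treat this as a way to plug in your own figures rather than a price list.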

Customizable Player Experiences and Adaptive Gameplay

One of the coolest things about AI text to speech for games is how it lets your game adapt to players. With traditional voice acting, you’re stuck with whatever lines you recorded. But with AI, your dialogue can change based on:

- Player choices and game history

- Time of day or season in the game

- Character relationships and reputation

- Random events or procedurally generated content

This interactive storytelling approach gives players something unique every time they play, making your game feel alive and responsive.
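
As a minimal sketch of what "dialogue that changes based on game state" can look like, the Python below builds a shopkeeper line from a few of the factors listed above. The `GameContext` fields and the wording of the lines are hypothetical; the resulting text is what you would hand to whichever voice service you use.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class GameContext:
    """Hypothetical snapshot of the state that dialogue can react to."""
    player_name: str
    hour: int                      # in-game hour, 0-23
    reputation: int                # negative = disliked, positive = trusted
    last_purchase: Optional[str] = None

def shopkeeper_greeting(ctx: GameContext) -> str:
    """Build the line of text that would be sent to the voice service."""
    time_part = "Good morning" if ctx.hour < 12 else "Good evening"
    if ctx.reputation < 0:
        return f"{time_part}. Make it quick, {ctx.player_name}."
    line = f"{time_part}, {ctx.player_name}! Welcome back."
    if ctx.last_purchase:
        line += f" How is that {ctx.last_purchase} holding up?"
    return line

print(shopkeeper_greeting(GameContext("Ayla", 9, 5, "iron sword")))
```

Because the text is assembled at runtime, every combination of name, time, and history can be voiced without recording each variant in advance.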

Technical Implementation Foundations

Understanding AI Voice Generation Technology

So how does this stuff actually work? At its heart, game voice generation uses neural networks trained on recordings of real human speech to create voices that sound natural.

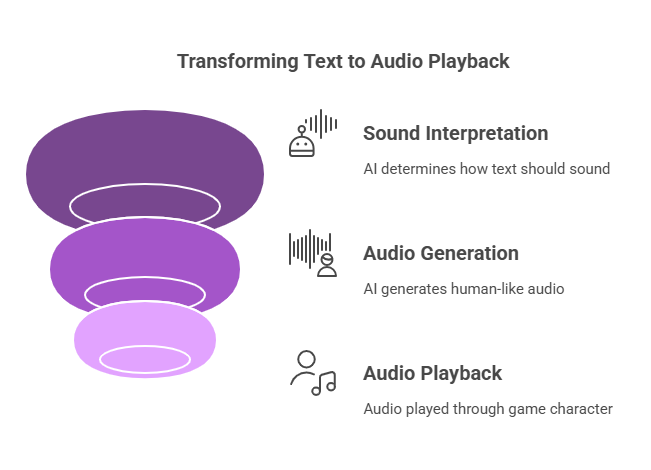

The basic process goes something like this:

- Your game sends some text to the AI system

- The AI figures out how that text should sound (including pauses, emphasis, question marks, etc.)

- It generates audio that matches what a human would sound like saying those words

- Your game plays that audio through a character or narrator

Modern systems are pretty amazing – they can understand context, add the right emotional tone, and even include natural elements like breathing or slight hesitations that make the voice sound real. The best ones are getting harder to tell apart from actual human recordings.
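
The four steps above can be sketched in a few lines of Python. This is only an outline of the data flow: `fake_synthesize` stands in for a real voice service and `played.append` stands in for your game's audio system, and both are placeholders invented for this example.

```python
from typing import Callable

# A synthesizer is anything that turns (voice, text) into audio bytes.
Synthesizer = Callable[[str, str], bytes]

def speak(text: str, voice: str, synthesize: Synthesizer,
          play: Callable[[bytes], None]) -> None:
    """Send text to the voice system, then play whatever audio comes back."""
    audio = synthesize(voice, text)  # steps 1-3 happen inside the voice service
    play(audio)                      # step 4: hand the audio to the game

# Stand-ins so the sketch runs without a real service or audio device.
def fake_synthesize(voice: str, text: str) -> bytes:
    return f"[{voice}] {text}".encode("utf-8")

played = []
speak("Welcome, traveler.", "old_merchant", fake_synthesize, played.append)
```

Keeping the synthesizer behind a simple function like this makes it easier to swap providers later without touching your dialogue code.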

Required Technical Setup

Before you jump into adding AI voices to your game, you’ll need a few things in place:

- A way to manage your dialogue text (most games already have this)

- Audio playback features in your game (again, pretty standard)

- Some extra memory for processing voices

- Either an internet connection (for cloud AI services) or enough processing power (for standalone solutions)

None of this is particularly complicated – if your game can already play sound effects, you’re most of the way there already!

Selecting the Right AI Voice Solution for Your Project

When picking an AI voice solution for games, think about:

- How much money you can spend

- Whether your game needs to work offline

- How natural you need the voices to sound

- How many different voices you need

- Which languages you want to support

Some popular options include:

- Microsoft’s Azure AI Speech

- Amazon’s Polly

- Google Cloud Text-to-Speech

- Smaller specialized companies like ReadSpeaker or Replica Studios

Each has its own strengths, pricing, and integration methods. For beginners, the big companies (Microsoft, Amazon, Google) usually offer the smoothest experience with good documentation, while specialized services might give you more unique-sounding voices.

Platform-Specific Implementation Guides

Unity Integration Step-by-Step Guide

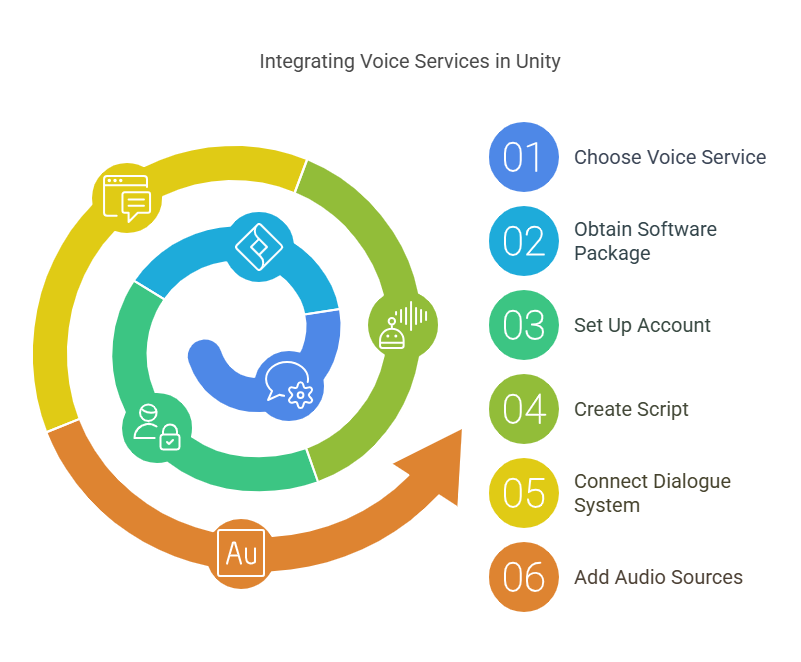

Adding voices to Unity games is pretty straightforward:

- Pick your favorite voice service

- Get their Unity SDK or plugin, if they offer one

- Set up your account and get your access keys

- Create a simple script to handle voice generation

- Connect your dialogue system to this script

- Add audio sources to your characters

- Test and adjust until it sounds right

The great thing about Unity is how many tutorials and examples exist online. Once you’ve got your basic setup working, you can usually find help for any specific challenges you run into.

Unreal Engine Implementation Process

For Unreal games, the process follows a similar pattern:

- Get the plugin for your chosen voice service

- Set up the connection with your account details

- Create a Blueprint that handles sending text and receiving audio

- Connect this to your existing dialogue system

- Test with different characters and scenarios

Unreal’s visual Blueprint system makes this particularly nice for non-programmers, as you can often set things up without writing much (or any) code.

Mobile Game Voice Implementation Strategies

When adding AI text to speech for games on phones and tablets, keep in mind:

- Battery life (voice processing can be power-hungry)

- Data usage (if using cloud services)

- Storage space (if pre-generating voice files)

- Performance on older devices

For mobile games, a good approach is often to pre-generate voices for common dialogue during loading screens or game installation, then only use real-time generation for dynamic or unexpected content.
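
One simple way to decide what to pre-generate is to check whether a line contains runtime placeholders. The sketch below assumes your script marks dynamic parts with curly-brace placeholders such as `{player_name}`; that convention is an assumption for this example, not something every dialogue system uses.

```python
import re

PLACEHOLDER = re.compile(r"\{[a-z_]+\}")  # e.g. {player_name}

def split_for_pregeneration(lines):
    """Return (static, dynamic): static lines can be voiced ahead of time."""
    static, dynamic = [], []
    for line in lines:
        (dynamic if PLACEHOLDER.search(line) else static).append(line)
    return static, dynamic

script = [
    "Welcome to Oakvale.",
    "Nice to see you again, {player_name}!",
    "The blacksmith is closed at night.",
]
static, dynamic = split_for_pregeneration(script)
print(len(static), "lines to pre-generate,", len(dynamic), "to voice at runtime")
```

The static list can be voiced once and shipped or cached on the device, which keeps battery and data use down for the lines players hear most.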

Web-Based Games Voice Integration

For games that run in browsers, you’ve got several options:

- Use the browser’s built-in speech features (simpler but less flexible)

- Connect to cloud voice services through JavaScript

- Pre-generate audio files for common dialogue

Browser games face some extra challenges around user permissions (browsers often require a user click before playing audio) and compatibility across different browsers and devices.

Advanced Voice Customization and Optimization

Voice Customization Options and Parameters

Modern neural voice systems for game dialogue let you tweak all sorts of settings:

- How high or low the voice sounds

- How fast or slow they talk

- How much the pitch varies (monotone vs. expressive)

- Where to put emphasis on certain words

- How to pronounce unusual words or names

- Adding emotional styles (happy, sad, scared)

- Including breathing, sighs, or other human sounds

Playing with these settings is like being a virtual voice director, helping your AI voices deliver their lines in just the right way.
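
Many services accept these settings through SSML, the W3C markup language for speech synthesis. Here is a minimal sketch that wraps a line in a standard `<prosody>` element and optionally adds a `<break>` pause. The helper name `to_ssml` is made up, and which pitch and rate values a given service honors is something to confirm in its documentation.

```python
from xml.sax.saxutils import escape, quoteattr

def to_ssml(text: str, pitch: str = "medium", rate: str = "medium",
            pause_ms: int = 0, lang: str = "en-US") -> str:
    """Wrap text in an SSML <prosody> element, with an optional trailing pause."""
    body = (f"<prosody pitch={quoteattr(pitch)} rate={quoteattr(rate)}>"
            f"{escape(text)}</prosody>")
    if pause_ms > 0:
        body += f'<break time="{pause_ms}ms"/>'
    return (
        '<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" '
        f"xml:lang={quoteattr(lang)}>{body}</speak>"
    )

print(to_ssml("Who goes there?", pitch="low", rate="slow", pause_ms=300))
```

Escaping the text matters here: dialogue containing characters like `&` or `<` would otherwise produce invalid markup.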

Creating Distinctive Character Voices with AI

To make characters that players will remember:

- Start with a voice that generally matches the character’s age, gender, and background

- Adjust the speaking speed (slower for thoughtful characters, faster for excited ones)

- Change how much pitch variation they have (more variation for expressive characters)

- Add in character-specific speech patterns or verbal habits

- Make a list of words they pronounce in unique ways

These small touches add up to create characters that feel different from each other. Even with just a few base AI voices, you can create dozens of distinctive characters through customization.
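
A lightweight way to keep those touches organized is a per-character profile. The sketch below is one possible shape, with hypothetical field names; it shows two characters sharing a base voice but differing in pace, pitch range, and a small list of custom respellings.

```python
import re
from dataclasses import dataclass, field

@dataclass
class VoiceProfile:
    """Hypothetical per-character settings layered on a shared base voice."""
    base_voice: str
    rate: str = "medium"         # slower for thoughtful characters
    pitch_range: str = "medium"  # wider for expressive characters
    respellings: dict = field(default_factory=dict)  # word -> how to say it

    def prepare(self, text: str) -> str:
        """Swap in respellings so names are pronounced consistently."""
        for word, spoken in self.respellings.items():
            pattern = rf"\b{re.escape(word)}\b"
            text = re.sub(pattern, lambda _m, s=spoken: s, text)
        return text

sage = VoiceProfile("voice_a", rate="slow", pitch_range="low",
                    respellings={"Xyr": "Zeer"})
jester = VoiceProfile("voice_a", rate="fast", pitch_range="high")

print(sage.prepare("Xyr awaits in Xyrmont."))
```

Respelling is a blunt tool; services that support phoneme markup or pronunciation lexicons usually give more precise control.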

Emotion and Expression in AI-Generated Game Dialogue

One of the biggest improvements in recent text to speech gaming technology is emotional range. Modern systems can express feelings by:

- Using special markup tags in your text to indicate emotion

- Applying emotional presets (like “cheerful” or “sad”)

- Changing voice parameters based on the situation

- Adding appropriate pauses and emphasis

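For example, you might mark up dialogue like this (the bracket tags are just for illustration; each voice service has its own markup syntax, often based on SSML): “I’m [happy] so glad to see you again! [/happy] [worried] But we need to hurry, they’re coming! [/worried]”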

The AI would then adjust the voice to match each emotional state as it reads the line.
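
If you use illustrative bracket tags like the ones in the example, your game needs to split the line into segments before talking to the voice service. Here is a minimal sketch of that step; the tag format and the `neutral` fallback label are assumptions for this example, and you would still need to map each emotion to whatever your provider supports.

```python
import re

TAGGED = re.compile(r"\[(\w+)\](.*?)\[/\1\]", re.DOTALL)

def split_by_emotion(line: str):
    """Return (emotion, text) pairs; untagged text is labeled 'neutral'."""
    segments, pos = [], 0
    for match in TAGGED.finditer(line):
        before = line[pos:match.start()].strip()
        if before:
            segments.append(("neutral", before))
        segments.append((match.group(1), match.group(2).strip()))
        pos = match.end()
    tail = line[pos:].strip()
    if tail:
        segments.append(("neutral", tail))
    return segments

line = ("I'm [happy] so glad to see you again! [/happy] "
        "[worried] But we need to hurry, they're coming! [/worried]")
for emotion, text in split_by_emotion(line):
    print(emotion, "->", text)
```

Each segment can then be synthesized with its own emotional preset and the clips played back to back.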

Performance Optimization and Resource Management

To keep your game running smoothly with all these voices:

- Save common phrases so you don’t have to generate them repeatedly

- Process voices during loading screens when possible

- Use higher quality for important characters and simpler voices for background NPCs

- Have a system to decide which dialogue is most important when multiple characters might speak

- For longer speeches, consider streaming the audio rather than waiting for it all to generate

These optimizations help make sure your game stays responsive even with lots of voiced characters.
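
Two of these ideas, caching repeated lines and choosing who gets to speak, fit in a short sketch. The class and function names are hypothetical, the cache lives in memory only, and the priority scheme (lower number wins) is just one possible convention.

```python
import hashlib

class VoiceCache:
    """In-memory cache so each (voice, text) pair is generated only once."""

    def __init__(self, synthesize):
        self._synthesize = synthesize  # callable(voice, text) -> bytes
        self._clips = {}
        self.misses = 0                # how many times we had to generate

    def get(self, voice: str, text: str) -> bytes:
        key = hashlib.sha256(f"{voice}\n{text}".encode("utf-8")).hexdigest()
        if key not in self._clips:
            self.misses += 1
            self._clips[key] = self._synthesize(voice, text)
        return self._clips[key]

def pick_speaker(requests):
    """Given (priority, speaker, text) tuples, return the most important one.

    Lower numbers mean higher priority.
    """
    return min(requests, key=lambda request: request[0])

cache = VoiceCache(lambda voice, text: f"{voice}:{text}".encode("utf-8"))
cache.get("guard", "Halt!")
cache.get("guard", "Halt!")  # served from the cache, no second generation
```

In a shipped game you would likely persist the cache to disk and cap its size, but the lookup logic stays the same.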

Azure AI Speech for Game Development

Azure Speech Service Capabilities for Games

Microsoft’s Azure AI Speech is one of the most popular options for games, offering:

- Over 400 voices across more than 140 languages and locales (the exact counts change as the service is updated)

- Really natural-sounding neural voices

- Options to create your own custom voices

- Both immediate and batch processing

- The ability to recognize player speech too (for voice commands)

- Translation features for multilingual games

Implementation Process with Azure Speech

Getting started with Azure is pretty simple:

- Sign up for an Azure account and create a Speech resource

- Make note of your key and region information

- Get the Speech SDK or plugin for your game engine

- Set up your account details in your game

- Create a function that sends text and receives audio

- Hook this up to your dialogue system

The actual implementation is straightforward – you send text to Azure, and it sends back audio that your game can play. Most of the complexity comes in how you integrate this with your existing gameplay systems.
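
For quick prototyping outside a game engine, the "send text, get audio" round trip can be tried with plain HTTP. The Python sketch below builds a request for Azure's REST text-to-speech endpoint using only the standard library. The endpoint pattern, header names, output format, and the `en-US-JennyNeural` voice should all be checked against the current Azure documentation before you rely on them, and the function names and `User-Agent` value are made up for this example.

```python
import urllib.request
from xml.sax.saxutils import escape

def build_tts_request(text: str, key: str, region: str,
                      voice: str = "en-US-JennyNeural") -> urllib.request.Request:
    """Build (but do not send) a text-to-speech REST request."""
    ssml = (
        "<speak version='1.0' xml:lang='en-US'>"
        f"<voice name='{voice}'>{escape(text)}</voice></speak>"
    )
    return urllib.request.Request(
        url=f"https://{region}.tts.speech.microsoft.com/cognitiveservices/v1",
        data=ssml.encode("utf-8"),
        method="POST",
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/ssml+xml",
            "X-Microsoft-OutputFormat": "riff-24khz-16bit-mono-pcm",
            "User-Agent": "my-game-prototype",
        },
    )

def synthesize(text: str, key: str, region: str) -> bytes:
    """Send the request and return WAV bytes. Needs network access and a valid key."""
    request = build_tts_request(text, key, region)
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read()
```

Calling `synthesize("Welcome, traveler.", key, region)` with your own key and region should return audio you can write to a `.wav` file and audition. Keep the key out of your shipped game build; a small server of your own that forwards requests is a common way to do that.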

Prebuilt vs. Custom Neural Voices in Azure

Azure gives you two main options for voices:

Ready-made voices:

- Available immediately

- Don’t require any special setup

- Come in lots of languages and styles

- Cost less

Custom voices:

- Created based on recordings you provide

- Unique to your game

- Cost more and take time to set up

- Give your game a more distinctive sound

For most small to medium-sized games, the ready-made voices with some customization work perfectly well. Custom voices make more sense for bigger productions or when you want a truly unique sound.

Game Dialogue Prototyping with Azure AI

During development, Azure’s tools are super helpful for:

- Testing how dialogue sounds with different voice options

- Experimenting with emotional delivery

- Creating temporary voice tracks while finalizing your script

- Quickly updating audio when writers change dialogue

This speeds up the feedback loop for your narrative team and helps catch issues before they become problems.

Practical Use Cases and Applications

NPC Dialogue Systems with AI Voices

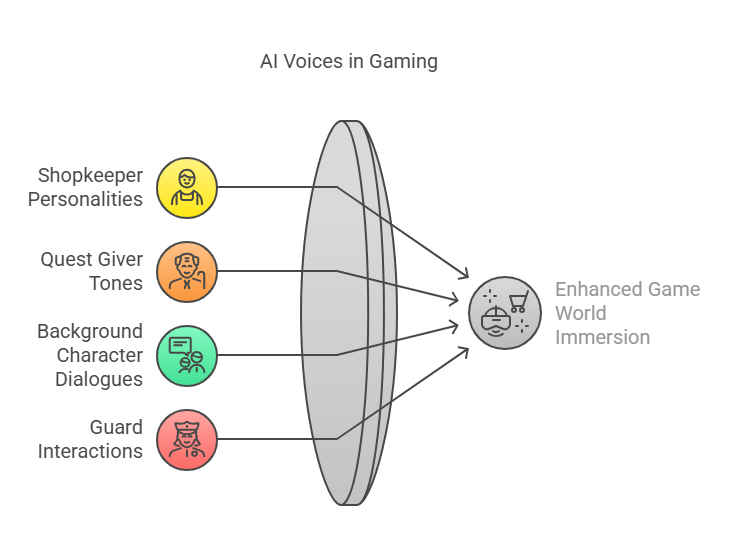

AI voice actors for games are perfect for bringing your characters to life:

- Shopkeepers with distinct personalities who comment on your purchases

- Quest givers who adjust their tone based on how important or dangerous the quest is

- Background characters who chat about the world or react to player actions

- Guards who remember you and greet you differently based on your reputation

The best part is you can give every single character a voice, even minor ones who might only have a few lines. This makes your world feel much more complete and alive.

Quest Guidance and Tutorial Systems

Voice-enabled tutorials make learning your game much easier:

- Explaining how to play while players keep their eyes on the action

- Giving hints during tricky parts without making players pause to read

- Providing direction that feels like a helpful friend rather than a manual

- Reading quest details aloud for better understanding

Players often find it easier to follow and remember instructions they hear while playing than text they have to stop and read.

Interactive Conversational Gameplay Mechanics

Some clever games use AI voices for unique gameplay:

- Detective games where vocal cues help you determine if someone’s lying

- RPGs where your dialogue choices affect how NPCs speak to you

- Life sims where building relationships changes how characters talk to you

- Horror games where voice tone creates suspense and fear

These mechanics depend on voiced dialogue, and would be prohibitively expensive with traditional voice acting.

Procedural Content Generation with Voice

The really exciting frontier is combining AI text to speech for video games with procedural generation. Imagine:

- RPGs that generate unique quests with fully voiced NPCs

- Strategy games where advisors comment on the specific situation at hand

- Simulation games where characters discuss events that just happened in your specific playthrough

- Adventure games where the narrator describes your unique choices and their consequences

This kind of dynamic, responsive content gives games nearly endless replay value since no two playthroughs sound exactly the same.

Testing and Quality Assurance

Voice Quality Assessment Methods

Once you’ve implemented voices, you’ll want to make sure they sound good:

- Listen to samples with different headphones and speakers

- Check how voices sound during actual gameplay (with music and effects)

- Test different emotional states for each character

- Have people unfamiliar with the script listen and give feedback

- Compare different voice services or settings side-by-side

Remember that “good enough” is often the right target – players generally don’t expect indie games to have AAA voice acting quality, and will appreciate the effort of including voices at all.

Common Issues and Troubleshooting Strategies

When things go wrong, check for these common problems:

- Pronunciation issues with unusual names or terms (create custom pronunciations)

- Voices cutting off too quickly at the end of sentences (add a small pause)

- Emotional mismatch between text and delivery (check your emotion markup)

- Voices sounding too similar (adjust customization parameters)

- Audio quality issues (check your processing and playback settings)

Most issues have straightforward fixes once you identify the problem.
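
As one example of a fix, the "voices cutting off" problem can sometimes be handled on your side by padding the clip with a little silence before playback. This sketch uses Python's standard `wave` module and assumes the clip is uncompressed PCM WAV; the function name and the 250 ms default are arbitrary choices.

```python
import io
import wave

def add_trailing_silence(wav_bytes: bytes, silence_ms: int = 250) -> bytes:
    """Return a copy of a PCM WAV clip with silence appended at the end."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as src:
        params = src.getparams()
        frames = src.readframes(src.getnframes())

    extra_frames = params.framerate * silence_ms // 1000
    # 8-bit PCM WAV is unsigned (silence is 0x80); wider samples are signed (0x00).
    silent_byte = b"\x80" if params.sampwidth == 1 else b"\x00"
    silence = silent_byte * (extra_frames * params.nchannels * params.sampwidth)

    out = io.BytesIO()
    with wave.open(out, "wb") as dst:
        dst.setparams(params)
        dst.writeframes(frames + silence)  # header is patched to the new length
    return out.getvalue()
```

If your voice service supports pause markup, adding the pause in the request is usually cleaner, but padding works with any provider.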

User Testing and Feedback Integration

The ultimate test is putting your game in front of real players:

- Watch how they react to different characters

- Ask which voices they find most and least believable

- See if they understand instructions delivered by voice

- Note any dialogue they have trouble understanding

Player feedback is invaluable for fine-tuning your voice implementation. Often, what developers think sounds best isn’t what players prefer.

Future Trends and Advanced Applications

Emerging Technologies in AI Voice Generation

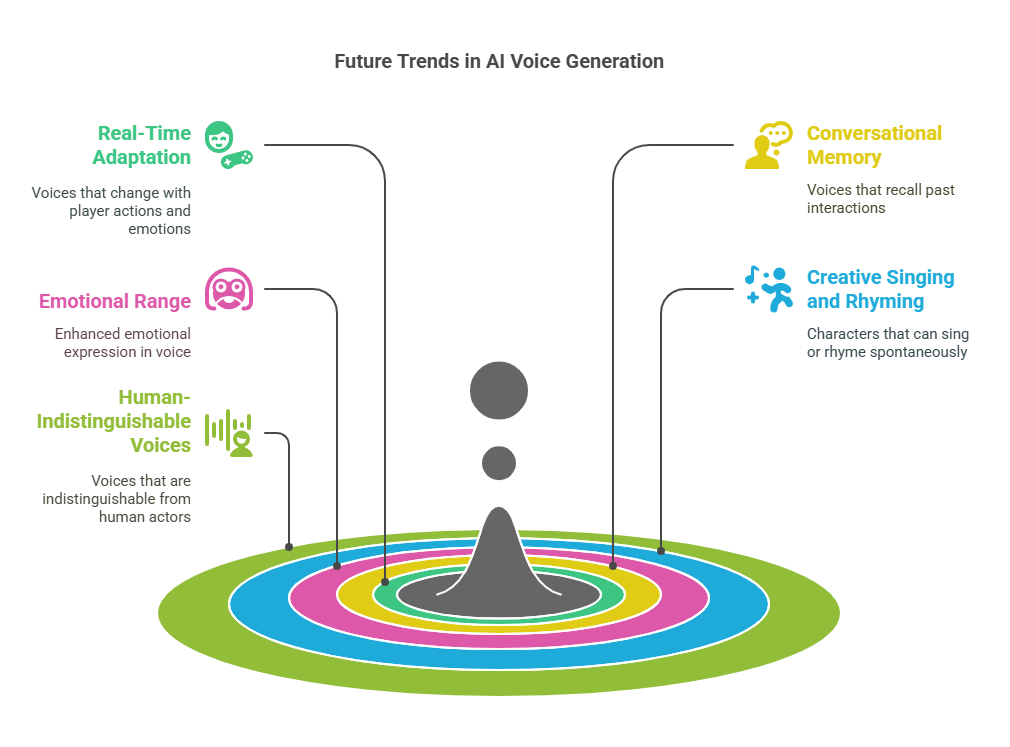

The future of AI text to speech for games looks amazing, with technologies like:

- Real-time adaptation to player actions and emotions

- Voices that remember past conversations and adjust accordingly

- More convincing emotional range and subtle expressions

- Characters who can sing or create rhymes on the fly

- Voices indistinguishable from human actors

These advances will make games even more immersive and responsive to players.

Multi-Modal AI Integration (Voice, Animation, Behavior)

The next big leap is connecting voice with other systems:

- Characters whose facial animations match their voice perfectly

- NPCs whose body language complements their tone of voice

- AI that generates both dialogue and appropriate voice delivery

- Characters who respond to player voice input with appropriate emotions

This integration creates characters that feel completely alive and responsive.

Ethical Considerations and Best Practices

As with any technology, there are important considerations:

- Getting proper permission when basing AI voices on real people

- Clearly labeling AI-generated content in marketing materials

- Being respectful in how different accents and speech patterns are portrayed

- Considering the impact on professional voice actors’ livelihoods

- Providing options for players who may find certain voices triggering or uncomfortable

Responsible use of the technology helps the whole industry move forward positively.

Implementation Case Studies

Indie Game Success Stories with AI Voice Integration

Small teams are doing amazing things with AI voices:

- Text-heavy RPGs becoming fully voiced adventures

- Visual novels with distinct voices for every character

- Simulation games where hundreds of NPCs have unique voices

- Roguelikes where narration changes every run

These indie developers often report increased player engagement, better reviews, and stronger emotional connection to their games after adding AI voices.

AAA Implementation Examples and Lessons Learned

Bigger studios are using AI voice in interesting ways:

- For rapid prototyping before hiring voice actors

- Creating background NPC chatter that responds to game events

- Voicing content updates and DLC without bringing back the original cast

- Handling procedurally generated content that couldn’t be pre-recorded

The lesson many have learned is that AI voices work best as a complement to traditional voice acting, not a complete replacement.

Mobile Game Voice Integration Case Studies

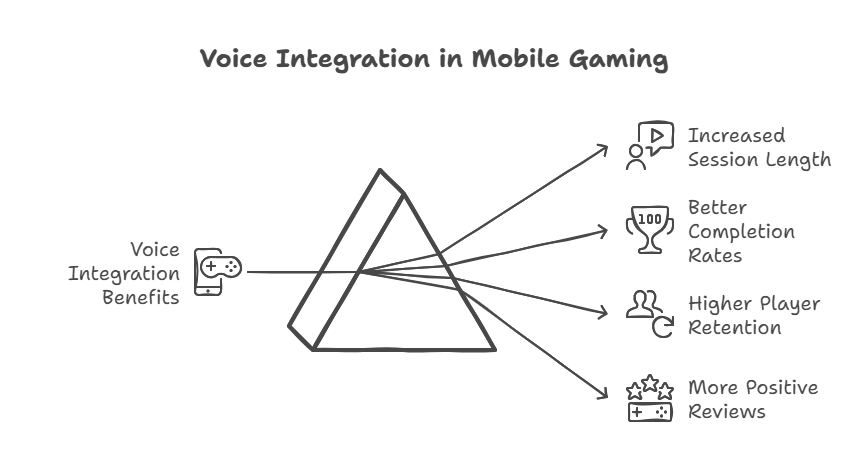

On mobile, voice integration has shown some clear benefits:

- Increased session length when tutorials are voiced

- Better completion rates for complex quests

- Higher player retention when characters have voices

- More positive reviews mentioning immersion and character connection

Mobile developers have learned to be strategic about voice use, focusing on key moments rather than voicing everything, to manage file size and performance.

Conclusion and Implementation Checklist

Key Takeaways for Successful Implementation

To make the most of AI text to speech for video games:

- Start small and expand – voice your most important characters first

- Test voices early in development to catch integration issues

- Be consistent with voice assignment and customization

- Use emotion and context to make voices more engaging

- Consider accessibility from the beginning

- Balance quality with performance requirements

Remember that even simple voice implementation can dramatically improve how players experience your game.

Step-by-Step Implementation Roadmap

Here’s a simple checklist to follow:

- Evaluate your game’s needs and budget

- Research and select an AI voice provider

- Set up the technical integration

- Create voice profiles for main characters

- Test with sample dialogue

- Implement in one section of your game

- Gather feedback and adjust

- Roll out to the rest of your game

- Optimize performance

- Plan for updates and expansions

This methodical approach helps avoid common pitfalls and ensures a smooth implementation.

Resources for Continued Learning and Support

To keep improving your game voice generation skills:

- Join game developer communities focused on narrative and audio

- Follow AI voice companies for updates on new features

- Participate in game jams to experiment with voice in small projects

- Share your experiences with other developers

- Stay informed about advances in voice technology

The field is moving quickly, and staying connected helps you make the most of new opportunities as they emerge.

With these tools and approaches, developers of all sizes can now bring their game worlds to life through voice. The days of silent NPCs or text-only dialogue are coming to an end, as AI text to speech for video games makes voice content accessible to everyone.

Sources

https://dev.to/edenai/how-to-use-text-to-speech-in-unity-3c9f

https://learn.microsoft.com/en-us/azure/ai-services/speech-service/gaming-concepts

https://www.veed.io/tools/voice-over-generator/voice-for-games